We live in an era where the volume of data grows exponentially, and the problems we aim to solve are increasingly complex. From simulating chemical reactions to optimizing global logistics routes, classical computing — based on bits that can be either 0 or 1 — is reaching its physical and mathematical limits.

For decades, silicon processors have followed Moore’s Law, but we’re now approaching a point where shrinking transistors further no longer delivers the performance gains it once did. This raises an unavoidable question: how can we continue to evolve if classical computers are hitting their ceiling?

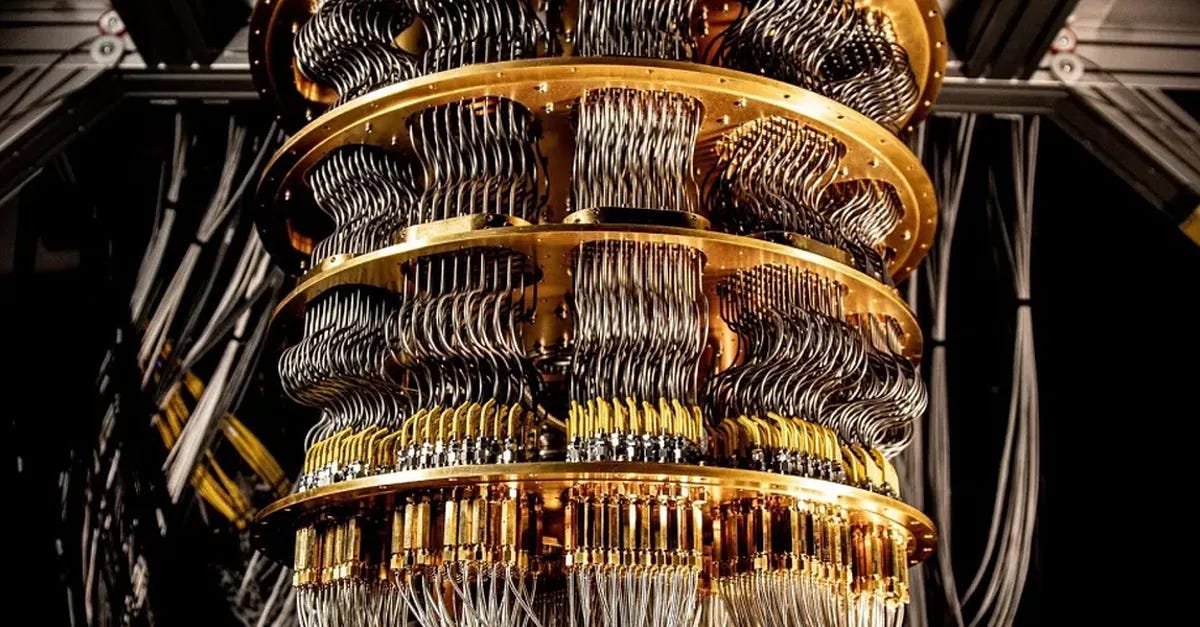

That’s where quantum computing comes in — an entirely new approach that leverages the laws of quantum physics to process information in a fundamentally different way. But what does that really mean, and how does it actually work?

How people tend to solve it: inevitable limits

When facing highly complex problems — such as predicting molecular interactions for a new drug or training massive AI models — the common strategy is to scale up classical computing power: more CPUs, more GPUs, larger clusters, and better parallelization algorithms.

This approach works to an extent, but it has inevitable limits:

Physical limit: transistor miniaturization is approaching atomic scale.

Energy consumption: high-performance computing requires massive amounts of electricity.

Exponential time: for some problems, the running time of the best known classical algorithms grows exponentially with the input size, so even supercomputers would take thousands of years to solve realistic instances.
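To make "thousands of years" concrete, here is a back-of-the-envelope sketch. It assumes a hypothetical problem that can only be solved by checking all 2^n combinations of n yes/no choices, and a machine that checks 10^18 candidates per second (roughly exascale). Both figures are illustrative assumptions, not measurements of any real system.

```python
# Back-of-the-envelope cost of exhaustive search over n yes/no variables.
# Assumptions (hypothetical): every one of the 2**n candidates must be checked,
# and the machine checks 10**18 candidates per second (roughly exascale).
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(n_bits: int, ops_per_second: float = 1e18) -> float:
    """Years needed to try all 2**n_bits candidate solutions."""
    candidates = 2 ** n_bits
    return candidates / ops_per_second / SECONDS_PER_YEAR


for n in (40, 60, 80, 100):
    print(f"n = {n:3d}: {brute_force_years(n):.3g} years")
```

Under these assumptions, n = 100 already needs about 40,000 years, and every additional 20 variables multiplies the time by roughly a million (2^20 ≈ 1.05 × 10^6). Faster hardware only shifts this curve; it does not change its exponential shape.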

These restrictions reveal a simple truth: for certain tasks, classical computing will never be enough — and that’s where the quantum world offers a new paradigm.

How it should be automated: a new paradigm based on qubits

Quantum computing introduces a new paradigm based on qubits, the fundamental unit of quantum information.

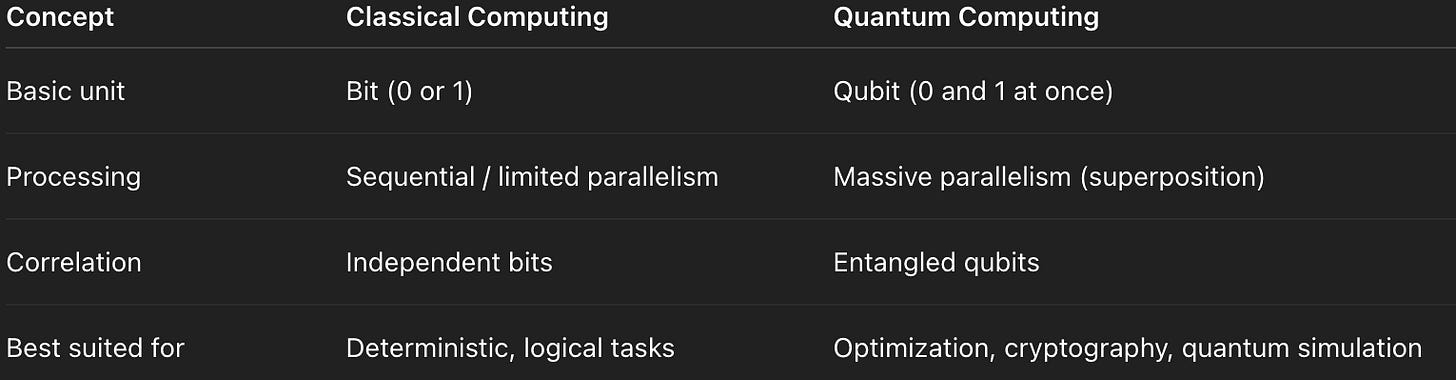

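While a classical bit is always either 0 or 1, a qubit can be in a superposition of 0 and 1: its state is a weighted combination of both, described by two amplitudes. When the qubit is measured, it yields 0 or 1, with probabilities given by the squared magnitudes of those amplitudes.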
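A qubit's state can be written down as a pair of complex amplitudes. The minimal sketch below simulates one qubit in plain Python (a classical simulation, not a quantum program): it applies a Hadamard gate to |0⟩ to create an equal superposition, then samples measurements using the squared-magnitude rule. The helper names `hadamard`, `probabilities`, and `measure` are illustrative and do not come from any quantum SDK.

```python
import math
import random

# A single qubit as a pair of complex amplitudes (alpha, beta) for |0> and |1>.
# Valid states satisfy |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: the probability of reading 0 or 1 is the squared magnitude."""
    a, b = state
    return abs(a) ** 2, abs(b) ** 2


def measure(state, rng):
    """Sample one classical bit; a real measurement destroys the superposition."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)
print(probabilities(plus))  # approximately (0.5, 0.5)

rng = random.Random(7)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(plus, rng)] += 1
print(counts)  # roughly 5,000 of each outcome
```

Each individual measurement returns a plain 0 or 1; the superposition shows up only in the statistics over many runs.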

But that’s not all: qubits can also become entangled, meaning that measurement outcomes on one qubit are correlated with those on another, even when they are physically separated. Because an entangled register must be described as a whole, n qubits require 2^n amplitudes, and a quantum algorithm acts on all of them at once. This is the source of the potential computational advantage, although only a single outcome can be read out at the end.
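Entanglement can be sketched the same way. The block below builds the Bell state (|00⟩ + |11⟩)/√2 by applying a Hadamard gate to the first qubit and then a CNOT gate, and samples joint measurements of both qubits. It is a plain-Python classical simulation; the helper names are hypothetical.

```python
import math
import random

# Two-qubit state: amplitudes for |00>, |01>, |10>, |11> (left bit = qubit 0).


def apply_h_on_qubit0(state):
    """Hadamard on qubit 0, leaving qubit 1 untouched."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with qubit 0 as control: flips qubit 1 when qubit 0 is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, rng):
    """Measure both qubits together, returning an outcome such as '00'."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=probs)[0]


bell = apply_cnot(apply_h_on_qubit0([1, 0, 0, 0]))
rng = random.Random(42)
outcomes = [sample(bell, rng) for _ in range(1_000)]
print({k: outcomes.count(k) for k in ("00", "01", "10", "11")})
```

Only `00` and `11` appear: each qubit on its own behaves like a fair coin, yet the two results always agree. The state is also a list of 2^n amplitudes for n qubits, so this kind of classical simulation doubles in cost with every qubit added.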

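In simple terms: a bit holds one definite value, a qubit holds a weighted combination of values until it is measured, and entangled qubits can only be described together.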

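With these principles, quantum algorithms such as Shor’s algorithm (for integer factorization) and Grover’s algorithm (for unstructured search) have shown large theoretical advantages over their classical counterparts. Shor’s algorithm factors integers in polynomial time, superpolynomially faster than the best known classical algorithms, while Grover’s algorithm finds a marked item among N candidates in about √N steps instead of the roughly N steps a classical search needs.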
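Shor’s algorithm reduces factoring N to finding the period r of a^x mod N; only that period-finding step runs on the quantum computer. The sketch below shows the classical reduction around it, with the quantum step replaced by a brute-force loop, so it offers no speedup at all. It only shows where the quantum part fits. The function names are illustrative.

```python
import math


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This brute-force loop stands in for the quantum period-finding step;
    classically its cost grows exponentially with the number of digits of n.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(n: int, a: int):
    """Classical post-processing from Shor's algorithm.

    Returns a non-trivial factor pair of n, or None when this choice of a
    fails (the full algorithm then retries with another a).
    """
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: unusable
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a**(r/2) = -1 mod n gives only trivial factors
    p = math.gcd(y - 1, n)  # y*y = 1 mod n and y != +-1, so p is non-trivial
    return p, n // p


print(factor_from_period(15, 7))
print(factor_from_period(21, 2))
```

For N = 15 and a = 7, the period is 4, 7^2 mod 15 = 4, and gcd(3, 15) = 3 gives the factors 3 and 5. A quantum computer finds r efficiently even when N has hundreds of digits.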

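In practice, we are still in what Preskill (2018) called the NISQ era (Noisy Intermediate-Scale Quantum), where quantum processors have from about 50 to a few hundred qubits and are too error-prone to run long computations. Even so, such systems have demonstrated quantum advantage on narrowly defined benchmark tasks, such as the random circuit sampling experiment reported by Arute et al. (2019), while practical applications are still emerging.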

In this sense, “automation” doesn’t just mean using faster hardware — it means rethinking how information itself is processed, adopting models rooted in probability, interference, and quantum correlation.

Conclusion

Quantum computing is not simply an evolution of classical computing — it is a conceptual revolution. While classical systems rely on binary logic and deterministic circuits, quantum systems exploit the deepest principles of nature: superposition, entanglement, and interference.

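Put simply, quantum computers explore a “universe of possibilities”: an algorithm prepares a superposition over many candidate solutions, then uses interference so that the amplitudes of wrong answers cancel while that of the right answer is reinforced, before measurement “collapses” the state to a single answer that is correct with high probability.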
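The role of interference can be illustrated with Grover’s search, the standard small example of amplitude amplification. The sketch below tracks amplitudes as real numbers on a classical machine, so it gains no speedup. The 3-qubit size and the marked index 5 are arbitrary choices made for illustration.

```python
import math


def grover_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Simulate Grover search on a plain list of real amplitudes.

    Returns the probability of measuring the marked index after the given
    number of oracle + diffusion rounds.
    """
    size = 2 ** n_qubits
    amps = [1 / math.sqrt(size)] * size  # uniform superposition over all indices
    for _ in range(iterations):
        amps[marked] = -amps[marked]  # oracle: flip the sign of the answer
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]  # diffusion: reflect about the mean
    return amps[marked] ** 2


n = 3
best = math.floor(math.pi / 4 * math.sqrt(2 ** n))  # about (pi/4) * sqrt(N) rounds
for k in range(best + 2):
    print(k, round(grover_probability(n, marked=5, iterations=k), 3))
```

With N = 8, the probability of reading the marked item rises from 1/8 to about 0.95 after two rounds, then falls again after a third: the same interference that amplifies the answer can also overshoot it, which is why the number of rounds must be chosen carefully.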

They don’t replace classical computers but rather complement and expand their capabilities — particularly in areas such as:

Cryptography and information security

Molecular and materials simulation

Financial and logistical optimization

Artificial intelligence and machine learning

In other words, quantum computing is humanity’s next step toward understanding and manipulating information at its most fundamental level — the quantum level.

References

Nielsen, M. A., & Chuang, I. L. (2010). Quantum Computation and Quantum Information. Cambridge University Press.

Preskill, J. (2018). Quantum Computing in the NISQ Era and Beyond. Quantum, 2, 79.

Arute, F. et al. (2019). Quantum Supremacy Using a Programmable Superconducting Processor. Nature, 574(7779), 505–510.

IBM Quantum. (2024). The Quantum Decade: IBM’s Vision for the Future of Quantum Computing. Available at: https://www.ibm.com/quantum

Google Quantum AI. (2023). Quantum Computing Milestones and the Path Ahead. Available at: https://quantumai.google